February 24, 2017

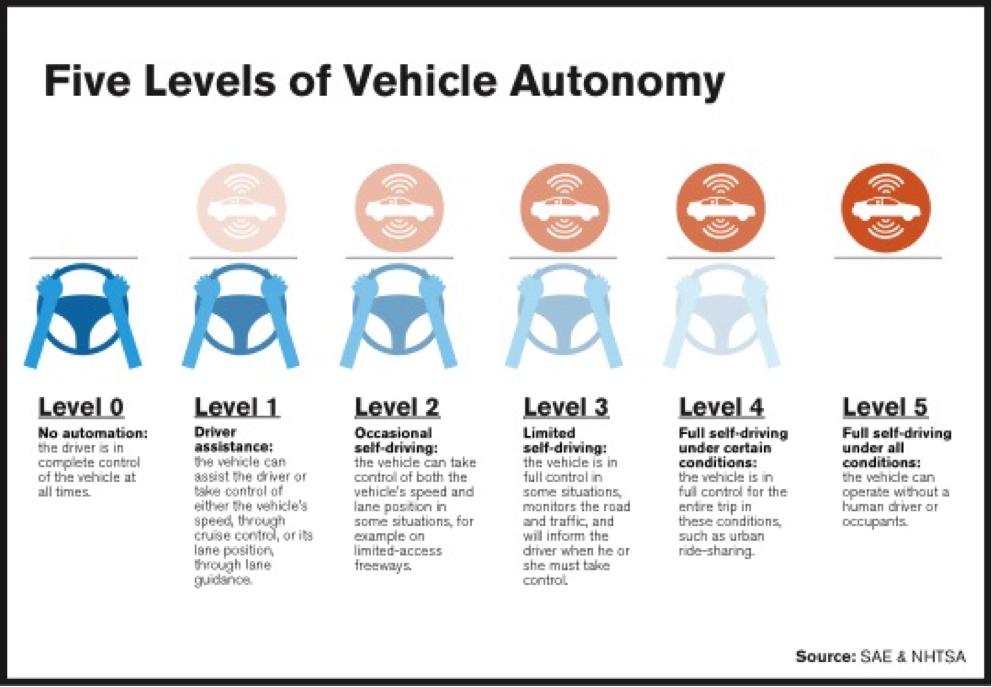

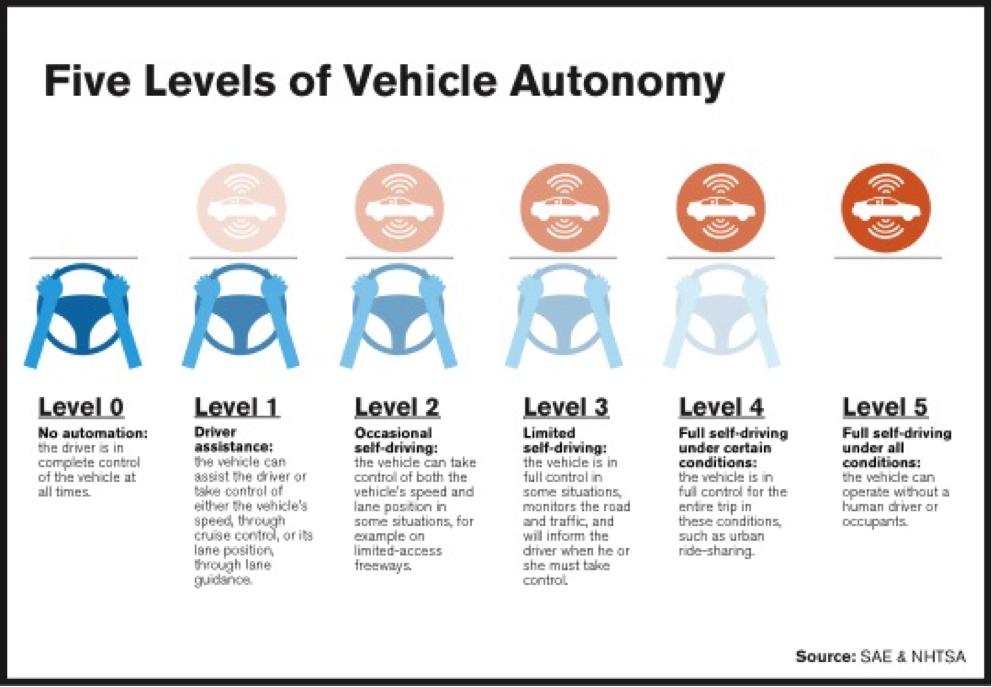

When it comes to describing automated vehicles, the definition is really a sliding scale. In 2014, the Society of Automotive Engineers (SAE) created a classification of six levels with supporting definitions. This system, since adopted by federal regulators, serves as a guide to clarify the roles of human drivers and computer systems in an increasingly automated world. But these classifications raise difficult questions about how to assign responsibility in the case of a crash.

In most cases, the assignment of responsibility – and therefore liability – is straightforward.

For example, in Level 1 automation, which includes the cruise control that exists on most cars, the human driver is clearly responsible for driving the vehicle. The opposite is undisputed at the other end of the scale: in Level 4 or 5 automation, the human is not expected to intervene or take back control in any case.

The problems arise in Levels 2 and 3, where the automated driving features can maneuver the vehicle in most instances, but require the human driver to take over at a moment’s notice.

In Level 2, where the system conducts both steering and acceleration/deceleration functions, the driver is expected to take over at any point when the system does not work. For example, if the system does not detect the vehicle that is pulling out in front of you, it is your responsibility to take control immediately and avoid the crash.

In Level 3, the vehicle’s driving system is monitoring the environment, but if it detects a scenario that it cannot navigate, it warns the human driver and control is immediately transferred away from the system. In both of these cases, the vehicle suddenly becomes a “hot potato.”
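To make the division of roles concrete, here is a minimal Python sketch of the levels as this article describes them. The names `Fallback`, `FALLBACK_BY_LEVEL`, and `is_hot_potato_level` are hypothetical labels invented for illustration; they are not SAE terminology, and the mapping paraphrases the descriptions above rather than the standard itself.

```python
from enum import Enum


class Fallback(Enum):
    """Who handles a situation the automation cannot (illustrative labels)."""
    HUMAN_ALWAYS = "human drives; automation only assists"
    HUMAN_ON_FAILURE = "human must take over whenever the system fails"
    HUMAN_ON_WARNING = "human must take over when the system warns"
    NOBODY = "human is not expected to intervene"


# Paraphrase of the article's description of Levels 1 through 5.
FALLBACK_BY_LEVEL = {
    1: Fallback.HUMAN_ALWAYS,
    2: Fallback.HUMAN_ON_FAILURE,
    3: Fallback.HUMAN_ON_WARNING,
    4: Fallback.NOBODY,
    5: Fallback.NOBODY,
}


def is_hot_potato_level(level: int) -> bool:
    """True when the system usually drives but the human is still the fallback."""
    return FALLBACK_BY_LEVEL[level] in (
        Fallback.HUMAN_ON_FAILURE,
        Fallback.HUMAN_ON_WARNING,
    )


hot_potato_levels = [lvl for lvl in sorted(FALLBACK_BY_LEVEL) if is_hot_potato_level(lvl)]
print(hot_potato_levels)  # [2, 3]
```

Only the middle of the scale is flagged: at those levels the human is neither clearly driving nor clearly a passenger.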

Driving is a mentally demanding task. Monitoring and scanning a roadway while traveling at high speeds requires focus, attention, and a healthy dose of short-term memory, even for routine driving situations.

A recent study indicated that the average person needs at least 17 seconds to regain full focus on the roadway environment before they are truly ready to take back control of a vehicle. While this study has its shortcomings, the findings clash with what Levels 2 and 3 expect of the human driver.
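To put the 17-second figure in perspective, the following sketch estimates how far a vehicle travels during that time. It assumes a constant speed for the whole interval, and the speeds are illustrative values chosen here, not numbers from the study. The function name `distance_during_takeover` is hypothetical.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def distance_during_takeover(speed_mph: float, takeover_seconds: float = 17.0) -> float:
    """Feet traveled at constant speed while the driver regains focus.

    The 17-second default is the figure cited in the article; constant
    speed is a simplifying assumption.
    """
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * takeover_seconds


for speed in (30, 45, 65):  # illustrative speeds, not from the study
    print(f"{speed} mph: {distance_during_takeover(speed):,.0f} ft")
```

Under these assumptions, a vehicle moving at 65 mph covers roughly 1,600 feet, close to a third of a mile, before the driver is fully ready to take over.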

Recent observations of how humans act behind the wheel of vehicles with Level 2 systems, such as Tesla’s Autopilot feature, indicate that it is easy to lose focus. Ford Motor Company recently found its engineers routinely falling asleep during testing, and no amount of alarms appeared to keep the trained professionals alert.

Even worse, the current structure is handing the hot potato back to the human in a complex and potentially dangerous situation that they may not be prepared to handle.

This presents a conundrum for the industry. The automated driving functions perform remarkably well in many cases. Tesla’s track record indicates that its automated system drives the vehicle much more safely than humans do.

Given how well these systems perform, humans will inevitably come to trust the computer-driven system in Level 2 and Level 3 vehicles as they become more prevalent and reputable. This is probably a good thing for everyone; just look at the roughly 35,000 traffic deaths on American roadways in 2015 and know that humans are notoriously bad drivers.

But that does not mean that it is fair to expect humans to safely take over when the system hands off the hot potato.

The SAE levels of automation, and the corresponding assignment of liability, expect that humans can and should be trusted to pay attention to the driving environment in Level 2 and 3 automation.

However, it might be worthwhile for the government, the industry, and policymakers to consider how to better address the hot potato problem. The safety benefits from partial automation could be significant, but the expectation for constant human attention might be unrealistic.

It is unclear whether better driver training, more time for human takeover, or other actions are needed.

Nonetheless, it is likely that the hot potato problem will need to be addressed proactively. Otherwise, when unavoidable future crashes of Level 2 or Level 3 vehicles come to court, case law could upend the current system.